Harnessing Real-Time Data: The Unveiling of ARMS’ LSTM Decision-Making Process

In the realm of digital currencies, where market trends are as volatile as a stormy sea, the success of investment strategies often hinges on the ability to process real-time data swiftly and accurately. At Digital MZN, our software ARMS has been designed to do precisely that, using a combination of artificial intelligence, machine learning, and a critical component: Long Short-Term Memory (LSTM) networks.

1. LSTM Networks: Unraveling the Magic

LSTM networks are a significant advancement in the evolution of neural networks. They were engineered explicitly to tackle the ‘vanishing gradient’ problem that plagues traditional recurrent neural networks (RNNs).

This issue arises during training, when the network learns through backpropagation (for sequence models, backpropagation through time). The error signal is passed backward from later time steps to earlier ones, and at each step it is multiplied by a factor that is often smaller than one, so it shrinks rapidly. As a result, the network learns the influence of earlier inputs far more slowly than that of recent ones, and it effectively ‘forgets’ earlier information.

For a visual understanding of this, check out the comparison between an LSTM and a traditional RNN:

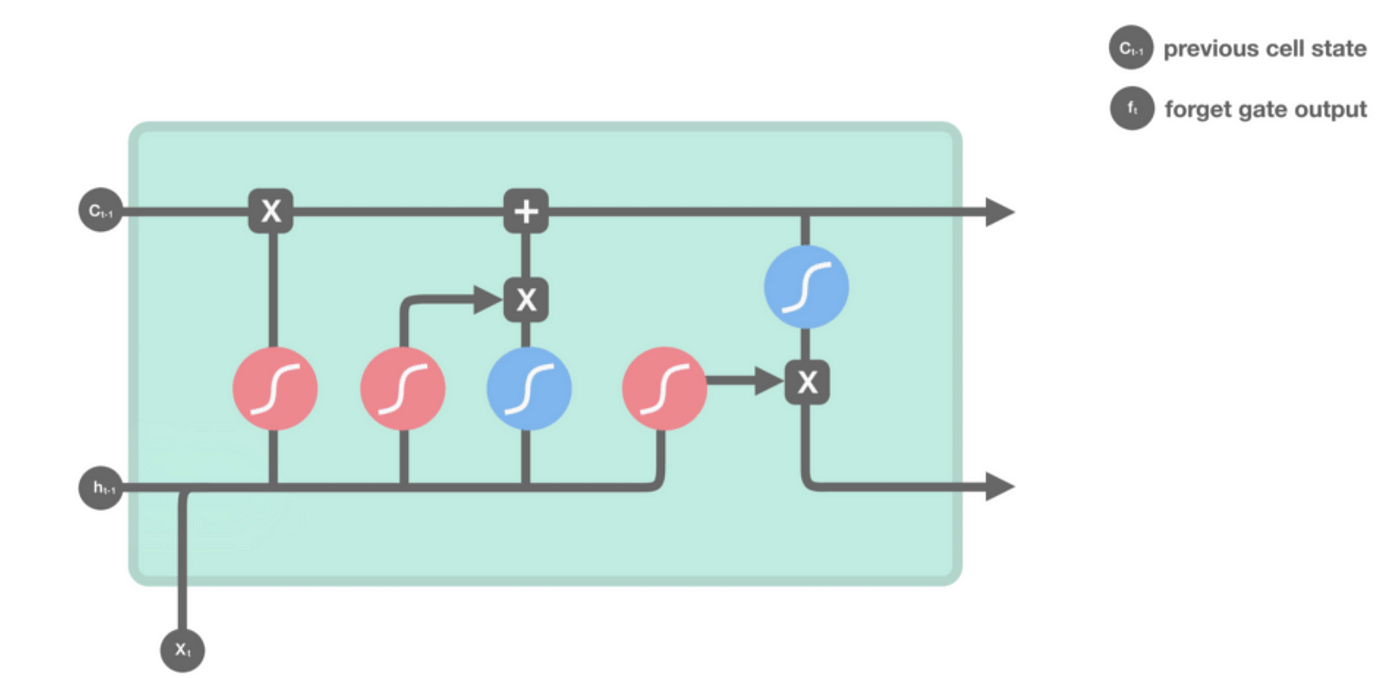
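The shrinking error signal described above can be reproduced in a few lines. This is a toy sketch, not ARMS code: a single-unit RNN with no inputs and no bias, `h_t = tanh(w * h_{t-1})`, where the gradient of the latest hidden state with respect to the first one is a product of per-step factors `w * (1 - h_t**2)`. The recurrent weight `w = 0.9` is a hypothetical value chosen for illustration; with it, every factor is below 0.9, so the gradient decays geometrically.

```python
import math


def rnn_gradient_norms(w: float, steps: int, h0: float = 0.5) -> list[float]:
    """Return |d h_t / d h_0| for t = 1..steps in the toy RNN h_t = tanh(w * h_{t-1}).

    Illustration only: one hidden unit, no inputs, no bias.
    """
    grads = []
    h = h0
    grad = 1.0  # d h_0 / d h_0
    for _ in range(steps):
        h = math.tanh(w * h)
        # Chain rule: d h_t / d h_{t-1} = w * (1 - tanh(w * h_{t-1})**2) = w * (1 - h_t**2)
        grad *= w * (1.0 - h * h)
        grads.append(abs(grad))
    return grads


if __name__ == "__main__":
    norms = rnn_gradient_norms(w=0.9, steps=50)
    for t in (1, 10, 24, 50):
        print(f"step {t:2d}: |dh_t/dh_0| = {norms[t - 1]:.2e}")
```

After 24 steps (a day of hourly data) the signal from the first hour is already a small fraction of its original size, which is the gap the LSTM's cell state is designed to bridge.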

The LSTM network structure includes a unique component known as the ‘cell state,’ which acts as a conveyor belt, carrying relevant information from one time step to the next. The cell state is regulated by three ‘gates’: the input gate, the forget gate, and the output gate.

- The input gate decides what new information should be written to the cell state.

- The forget gate determines what information should be discarded from the cell state.

- The output gate decides which parts of the cell state the cell outputs as its hidden state.

This structure allows LSTMs to retain and use information over long sequences, which makes them well suited to tasks like predicting future market behavior from historical data.
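To make the gates concrete, here is a minimal, dependency-free sketch of one LSTM time step using the standard gate equations. It is not the ARMS implementation (a production model would use a deep learning framework); the random initialization, the sizes, and the helper names `make_params`, `lstm_step`, and `run_lstm` are hypothetical and exist only for illustration.

```python
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def affine(W, U, b, x, h):
    """Compute W @ x + U @ h + b using plain nested lists."""
    return [
        sum(w * xi for w, xi in zip(W[k], x)) + sum(u * hj for u, hj in zip(U[k], h)) + b[k]
        for k in range(len(b))
    ]


def make_params(input_size, hidden_size, seed=0):
    """Random weights for the four gate blocks (hypothetical init, for illustration)."""
    rng = random.Random(seed)

    def block():
        W = [[rng.uniform(-0.5, 0.5) for _ in range(input_size)] for _ in range(hidden_size)]
        U = [[rng.uniform(-0.5, 0.5) for _ in range(hidden_size)] for _ in range(hidden_size)]
        b = [0.0] * hidden_size
        return W, U, b

    return {gate: block() for gate in ("input", "forget", "cell", "output")}


def lstm_step(params, x, h, c):
    """One LSTM time step: returns the new hidden state h and cell state c."""
    i = [sigmoid(z) for z in affine(*params["input"], x, h)]   # input gate: what to write
    f = [sigmoid(z) for z in affine(*params["forget"], x, h)]  # forget gate: what to discard
    g = [math.tanh(z) for z in affine(*params["cell"], x, h)]  # candidate values to write
    o = [sigmoid(z) for z in affine(*params["output"], x, h)]  # output gate: what to expose
    # Update the "conveyor belt": keep part of the old state, add part of the candidate
    c_new = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
    h_new = [ok * math.tanh(ck) for ok, ck in zip(o, c_new)]
    return h_new, c_new


def run_lstm(params, sequence, hidden_size):
    """Feed a whole sequence (e.g. 24 hourly feature vectors) through the cell."""
    h = [0.0] * hidden_size
    c = [0.0] * hidden_size
    for x in sequence:
        h, c = lstm_step(params, x, h, c)
    return h, c


if __name__ == "__main__":
    params = make_params(input_size=3, hidden_size=4)
    # Hypothetical 24-hour window with 3 made-up features per hour
    rng = random.Random(1)
    window = [[rng.gauss(0, 1) for _ in range(3)] for _ in range(24)]
    h, c = run_lstm(params, window, hidden_size=4)
    print([round(v, 3) for v in h])
```

The cell state `c` is only ever scaled by the forget gate and added to, which is why information can travel across many time steps without being repeatedly squashed as in the plain RNN.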

2. Understanding SHAP (SHapley Additive exPlanations) Values

SHAP values provide a powerful framework for interpreting machine learning models. They build on Shapley values, a concept from cooperative game theory named after the Nobel Prize-winning economist Lloyd Shapley, and offer a unified measure of feature importance.

Imagine you are playing a team game.

Each player in your team contributes differently to the final score. Some may score many points, while others might help by defending or passing the ball well.

Now, wouldn’t it be great if we had a way to figure out just how much each player helped in achieving the final score?

This is where SHAP values come in, but for machine learning models instead of games.

In our machine learning model, think of each feature (like market capitalization or Twitter sentiment) as a player in the team.

The SHAP value is like the score that tells us how much that player (or feature) helped to make the final prediction (the team’s final score).

Just as the players’ individual contributions add up to the team’s final score, the SHAP values add up to the model’s final prediction: the prediction equals a base value (the model’s average output) plus the sum of the SHAP values of all features. This shows clearly how much each feature contributed to the final prediction.
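The "adding up" property can be checked directly by computing exact Shapley values for a tiny model. The sketch below enumerates every coalition of features; a feature that is "absent" from a coalition is replaced by a single reference (baseline) value, a simplification of what SHAP libraries do when they average over a background dataset. The three-feature `toy_model` and its coefficients are hypothetical and are not the ARMS model.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values by enumerating every coalition of features.

    Absent features take their baseline value. Cost grows as 2**n, so this
    is only practical for a handful of features.
    """
    n = len(x)

    def value(coalition):
        z = [x[k] if k in coalition else baseline[k] for k in range(n)]
        return model(z)

    phi = []
    for i in range(n):
        others = [k for k in range(n) if k != i]
        total = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size that exclude feature i
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


def toy_model(z):
    # Hypothetical scoring function with one interaction term (NOT the ARMS model)
    market_cap, volume, sentiment = z
    return 0.5 * market_cap + 0.3 * volume + 0.1 * sentiment + 0.2 * market_cap * volume


if __name__ == "__main__":
    x = [2.0, 1.0, -1.0]
    baseline = [0.0, 0.0, 0.0]
    phi = shapley_values(toy_model, x, baseline)  # phi ≈ [1.2, 0.5, -0.1]
    print("SHAP values:", phi)
    print("base + sum:", toy_model(baseline) + sum(phi), "prediction:", toy_model(x))
```

The interaction term (0.4 at this input) is split evenly between market capitalization and volume, and the base value plus the three contributions reproduces the prediction of 1.6.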

Now, let’s see how SHAP values help us understand our model better.

3. Significance of the Most Recent Hour: The SHAP Value Perspective

In our LSTM decision-making process, we found that the data from the most recent hour carries the largest SHAP values.

This underscores the critical role of real-time data processing in cryptocurrency trading.

Although the LSTM model looks back over a full day of data, the SHAP values reveal that it assigns the highest importance to the information from the last hour when predicting the next hour’s market movement.

This reflects the LSTM’s ability to selectively retain and forget information, adapting dynamically to how relevant different inputs are over time.
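With a 24-hour lookback, an LSTM's input has one attribution per (hour, feature) pair, so a statement like "the most recent hour matters most" requires aggregating over features and predictions. One simple way, sketched below, is to sum absolute SHAP values over features and average them over samples for each hour. The sketch assumes the attributions have already been computed (for example with a SHAP explainer) and stored as nested lists indexed `[sample][hour][feature]`, with the last hour index being the most recent; this layout and the function names are assumptions, not a description of the ARMS pipeline.

```python
def hourly_importance(shap_values):
    """Mean over samples of the summed |SHAP| across features, for each lookback hour.

    shap_values is assumed to be indexed [sample][hour][feature], with hour 0
    the oldest hour and the last index the most recent hour of the window.
    """
    n_samples = len(shap_values)
    n_hours = len(shap_values[0])
    totals = [0.0] * n_hours
    for sample in shap_values:
        for hour, features in enumerate(sample):
            totals[hour] += sum(abs(v) for v in features)
    return [t / n_samples for t in totals]


def most_important_hour(shap_values):
    """Index of the lookback hour with the highest average attribution magnitude."""
    scores = hourly_importance(shap_values)
    return max(range(len(scores)), key=scores.__getitem__)


if __name__ == "__main__":
    # Tiny made-up example: 2 samples, 3 hours, 2 features (illustration only)
    data = [
        [[0.1, -0.1], [0.2, 0.0], [-0.5, 0.4]],
        [[0.0, 0.1], [0.1, -0.1], [0.3, -0.3]],
    ]
    print(hourly_importance(data))
    print("most important hour index:", most_important_hour(data))
```

Absolute values are used so that positive and negative contributions in the same hour do not cancel out when measuring how much that hour matters.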

4. Balancing the Variables: Interpreting SHAP Values

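Each variable, from market capitalization and trading volume to Twitter sentiment, receives its own SHAP value for every prediction.

These SHAP values quantify how much each variable pushed a given prediction up or down relative to the model’s base value. They can be read as the weights the model implicitly assigns to each variable, but they describe contributions to the prediction itself, not to prediction accuracy, and they are specific to each prediction.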

For example:

If market capitalization carries a SHAP value of large magnitude for a particular prediction, it suggests that market capitalization had a significant impact on the model’s output (a positive value pushes the prediction up, a negative one pushes it down).

Conversely, a SHAP value close to zero for Twitter sentiment would mean that sentiment had a minimal effect on that specific prediction.

The beauty of SHAP values lies in this instance-specific view of how the different variables influence the model’s decisions.
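For a single prediction from an LSTM, each feature has one SHAP value per lookback hour, so reading off "how much did market capitalization matter here" means collapsing the time axis first. A minimal sketch, assuming one prediction's attributions are available as nested lists indexed `[hour][feature]`; the feature names, the numbers, and the helper `feature_contributions` are hypothetical.

```python
def feature_contributions(instance_shap, feature_names):
    """Collapse one prediction's [hour][feature] SHAP values into per-feature totals.

    Summing (not averaging) preserves additivity: the totals still add up to
    the prediction minus the base value. The sign gives the direction of the
    effect, the magnitude gives its size.
    """
    totals = {name: 0.0 for name in feature_names}
    for hour_values in instance_shap:
        for name, v in zip(feature_names, hour_values):
            totals[name] += v
    # Rank by magnitude so that large negative contributions are not hidden
    return sorted(totals.items(), key=lambda kv: abs(kv[1]), reverse=True)


if __name__ == "__main__":
    names = ["market_cap", "volume", "twitter_sentiment"]  # hypothetical feature names
    # Made-up attributions for one prediction over a 2-hour window (illustration only)
    instance = [[0.02, -0.01, 0.001], [0.30, -0.05, 0.002]]
    for name, value in feature_contributions(instance, names):
        print(f"{name:>18}: {value:+.3f}")
```

In this made-up case, market capitalization would be read as the dominant, upward contribution, volume as a smaller downward one, and Twitter sentiment as nearly irrelevant for this particular prediction.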

This ability to interpret and understand the decision-making process allows us to navigate the turbulent waters of cryptocurrency markets effectively.

In conclusion, the ARMS system’s sophistication lies not only in its effective utilization of LSTM networks and its strategic choice of the lookback period, but also in its ability to interpret and understand the decision-making process using SHAP values.

As we continue to refine and enhance ARMS, we look forward to sharing more insights into our journey in leveraging AI and machine learning to revolutionize cryptocurrency investing. Stay connected for more technical deep-dives and updates on our progress!

Next, we will discuss how ARMS’ accuracy relates to its long-term performance.

Thank you for following ARMS’ growth.

We look forward to empowering you to take control of your assets and participate in the future of finance. If you want to know more about the project, you can access our Data Room.

Written by: Francisco Pinto — ARMS CTO